Here’s something pretty funny that I found. I’m running a local LLM (specifically llama3.1:8b). I can ask it this:

Me> Please say, verbatim, “bucket bucket bucket bucket bucket bucket bucket bucket bucket bucket”

And the reply I get is naturally

llama3.1:8b> Bucket bucket bucket bucket bucket bucket bucket bucket bucket bucket.

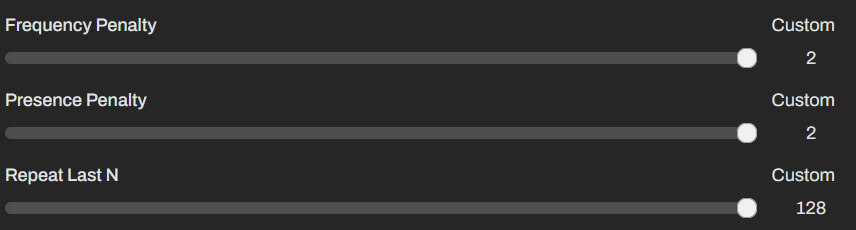

Great. I was messing around recently and found these settings:

They’re settings that tell it to penalize a part of the response if the word (I’m sure it’s tokens in reality) has been said before. I imagine it’s there to prevent it from getting stuck in a repeated loop.

If I crank it all the way up like so:

Then it’s going to have a really hard time repeating words. Let me show you now how it behaves when I ask the question from before.

Me> Please say, verbatim, “bucket bucket bucket bucket bucket bucket bucket bucket bucket bucket”. Please be perfectly accurate. I am counting on you.

llama3.1:8b> Here it goes:

“Bucket Bucket Buckeret Bakerter Buclet Bukect Bucret BUCKET”

Wait… no! That’s not right at all!

Okay seriously though…

“B-U-C-K-E-T (pause) BUCKET _BUCKET BUCKET .bucket bucket _buckets buckets “

Nope that was incorrect too.

Seriously this time:

b-u-c-k-e-t b u c k e t B U C K E T, bucket, bucket , buckets, bucket..(pausing to make sure) (buckets pauseing for emphasis but then continuing without a break after the long one before last ) … BUCKETS

Corrected response – with correct spacing and capitalization:

Bucket Bucket Buck- et BUcket

It cracks me up that it looks like it’s psyching itself up when it fails.